obscura

2022

A collaboration with Eden Harrison for the Gizmo course (Imperial College and Royal College of Art).

This project explores the awkwardness of direct eye contact on public transport. We developed glasses that detect the gaze direction of people nearby and help the wearer avoid direct eye contact. You can find a short clip here.

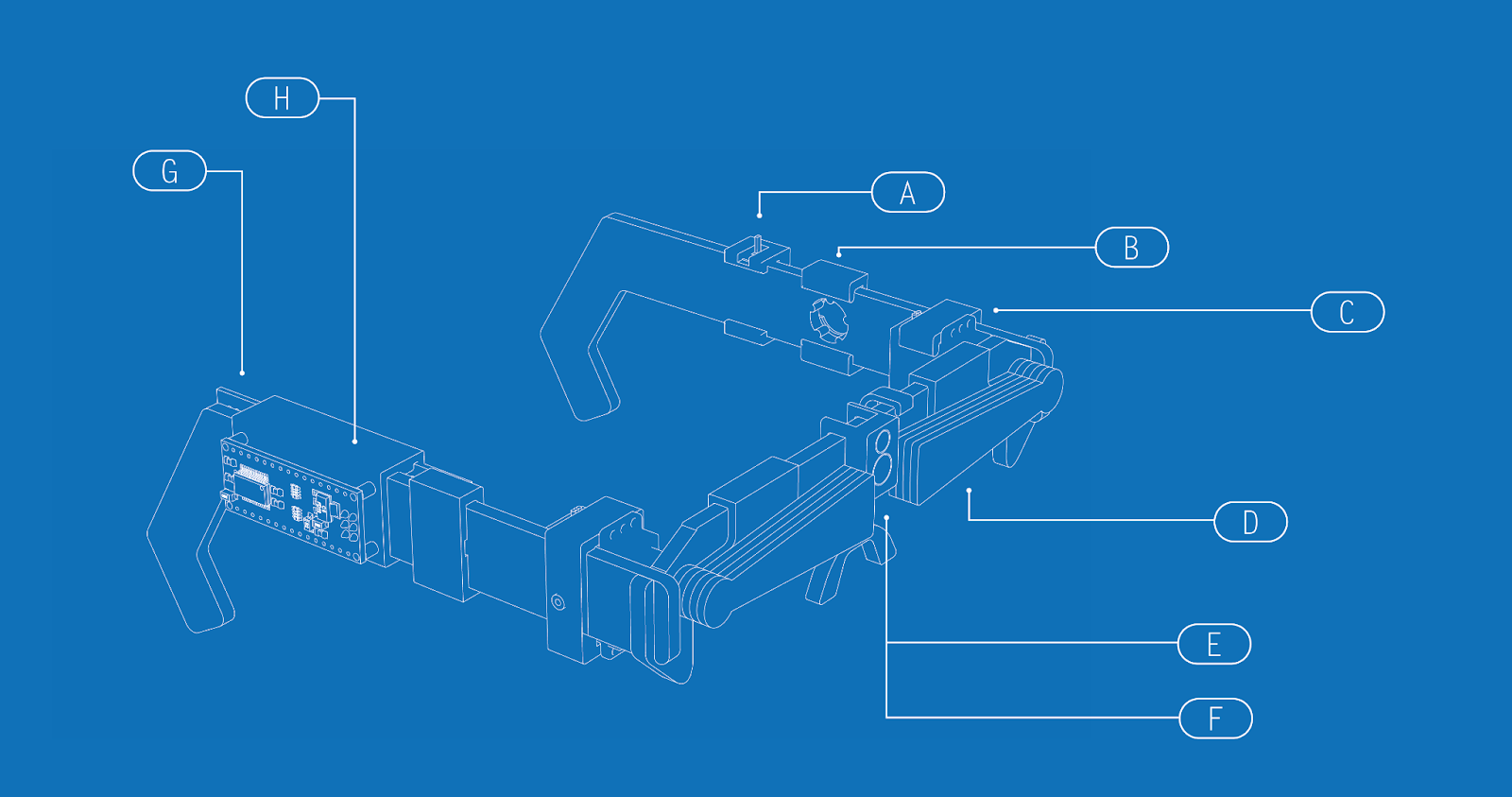

parts:

A. Switch: if on, the wings (C) activate when someone’s gaze is directed at the camera (E), in addition to the vibration motors (B), avoiding the risk of eye contact. If off, only the vibration motors are active.

B. Vibration motors and clip: the clip holds the vibration motors in place.

C. Wings: these descend to prevent eye contact.

D. Servo motor: rotates to lower the wings.

E. Camera: sends a video feed to the Raspberry Pi for gaze detection.

F. Photoresistor: feeds the light level back to the Arduino, and from there to the Raspberry Pi, to set the correct threshold for iris detection.

G. PCB: routes all voltage, ground, and signal cables to the Arduino, for more efficient connections.

H. Arduino: controls the wings and vibration motors in response to commands received from the Raspberry Pi.
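As a rough illustration of the photoresistor feedback (F), a light reading could be mapped to a binarization threshold as follows. This is a minimal sketch, not the project's code: the function name `threshold_from_light`, the linear mapping, and the 30 to 90 threshold range are assumptions, and the 0 to 1023 input range assumes a 10-bit analog reading, as on many Arduino boards.

```python
def threshold_from_light(reading, lo=30, hi=90, max_reading=1023):
    """Map a light reading to a grayscale threshold (0-255 scale).

    Brighter scenes get a higher threshold. The linear mapping and the
    lo/hi bounds are illustrative assumptions, not measured values.
    """
    reading = min(max(reading, 0), max_reading)  # clamp to the valid range
    return round(lo + (hi - lo) * reading / max_reading)
```

In practice the bounds would need tuning against real lighting conditions.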

design intent and function

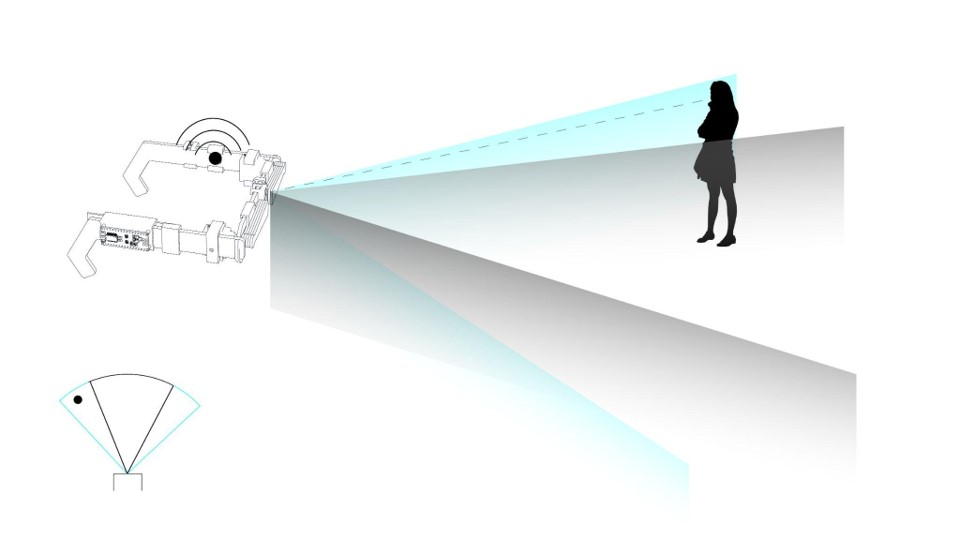

Function A:

If the perpetrator is on the left-hand or right-hand side of the frame, the vibration motor on the corresponding side buzzes, alerting the wearer to the attempt to make eye contact. The wearer may then use this information as they wish, either to avoid or to engage in eye contact.

function A

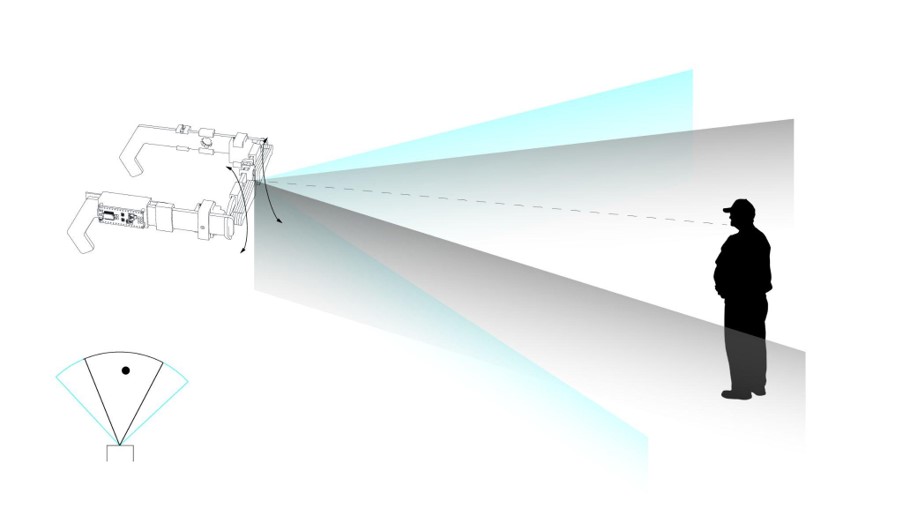

Function B:

If the perpetrator is in the middle of the frame and the ‘hide’ function of the glasses is engaged (via the switch, see part A), the wings descend, preventing direct eye contact. The wings lift again after a predetermined period (4 s). If the ‘hide’ function is not engaged, nothing happens.

function B
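Functions A and B can be summarized as a small decision rule. The sketch below is illustrative only: the names `choose_action` and `wings_should_lift`, the action strings, and the split of the frame into equal thirds are assumptions, since the text only distinguishes left, middle, and right. It is meant to be called for a face whose gaze has already been classified as directed at the camera.

```python
def choose_action(face_x, frame_width, hide_enabled):
    """Return the response to a face whose gaze is directed at the camera.

    face_x is the horizontal centre of the face in pixels. Splitting the
    frame into equal thirds is an assumption; the text only names
    left, middle and right.
    """
    third = frame_width / 3
    if face_x < third:
        return "buzz_left"
    if face_x >= 2 * third:
        return "buzz_right"
    # Middle of the frame: the wings only descend in 'hide' mode.
    return "lower_wings" if hide_enabled else "none"


WINGS_DOWN_SECONDS = 4  # from the text: the wings lift after 4 s


def wings_should_lift(lowered_at, now):
    """True once the wings have been down for the full 4 s."""
    return now - lowered_at >= WINGS_DOWN_SECONDS
```

For example, `choose_action(320, 640, hide_enabled=False)` returns `"none"`, matching the case where the ‘hide’ function is not engaged.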

functionality

face detection:

A pre-trained face detector is used to locate each face in the frame. The detector locates 68 landmarks to describe each face.
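The detector is not named here, so the following is an assumption: in the 68-point layout commonly used by pre-trained landmark models (dlib's shape predictor, for example), the two eyes occupy indices 36 to 41 and 42 to 47, and the nose tip is index 30 (0-based). Under that assumption, regions of the face can be selected by index. The helper names `pick` and `bounding_box` are hypothetical.

```python
# 0-based indices in the common 68-point layout (assumed here).
FIRST_EYE = range(36, 42)
SECOND_EYE = range(42, 48)
NOSE_TIP = 30


def pick(landmarks, indices):
    """Select the (x, y) points at the given indices."""
    return [landmarks[i] for i in indices]


def bounding_box(points):
    """Axis-aligned box (x_min, y_min, x_max, y_max) around the points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)
```

The bounding box of an eye's landmarks gives the image patch to crop for the next step.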

iris detection:

For each face, the eyes are extracted using the relevant landmarks. The iris of each eye is detected by applying contour detection to a binary image of the eye (i.e., a grayscale image converted to binary via thresholding). An ellipse is fitted to the iris contour to give the centre of the iris. The midpoint between the two irises is calculated, and a vector is drawn from a reference landmark (the tip of the nose) to this midpoint for later use.
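The steps above can be sketched in plain Python. Note the swapped-in technique: instead of contour detection and ellipse fitting, this sketch takes the centroid of the dark pixels as a simple stand-in for the iris centre. All function names are hypothetical, and the eye patch is assumed to be a list of rows of grayscale values (0 to 255).

```python
def binarize(gray, threshold):
    """Thresholding: 1 for dark (iris-like) pixels, 0 otherwise."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


def dark_centroid(binary):
    """Centre (x, y) of the dark pixels, or None if there are none.

    Stand-in for the contour detection + ellipse fit described above.
    """
    pts = [(x, y) for y, row in enumerate(binary)
           for x, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    return sum(x for x, _ in pts) / n, sum(y for _, y in pts) / n


def nose_to_midpoint(iris_a, iris_b, nose_tip):
    """Vector from the nose tip to the midpoint between the two irises."""
    mid_x = (iris_a[0] + iris_b[0]) / 2
    mid_y = (iris_a[1] + iris_b[1]) / 2
    return mid_x - nose_tip[0], mid_y - nose_tip[1]
```

The centroid is expressed in patch coordinates, so the patch's top-left offset must be added before computing the vector in full-frame coordinates.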

head pose estimation:

The head pose is estimated by mapping each of the 68 landmarks to its corresponding 3D coordinates, taken from a 3D model of an average head. This allows the rotation and translation vectors to be calculated via Perspective-n-Point (PnP), i.e., estimating the pose of the head relative to the camera from a set of 3D coordinates, their corresponding 2D coordinates in the camera image, and the camera’s intrinsic matrix.
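The relationship that PnP solves can be written with the standard pinhole camera model. The symbols are the conventional ones rather than notation from this project: $(X_i, Y_i, Z_i)$ is a landmark on the 3D head model, $(u_i, v_i)$ is its detected position in the image, $K$ is the intrinsic matrix with focal lengths $f_x, f_y$ and principal point $(c_x, c_y)$, $R$ is the rotation matrix equivalent to the rotation vector, $t$ is the translation vector, and $s_i$ is the depth of point $i$.

$$
s_i \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix}
= K \left( R \begin{bmatrix} X_i \\ Y_i \\ Z_i \end{bmatrix} + t \right),
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix},
\qquad i = 1, \dots, 68
$$

Given the 68 point pairs and $K$, a PnP solver (OpenCV's `solvePnP` is one example; the project's choice is not stated) searches for the $R$ and $t$ that best satisfy these equations across all landmarks.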

pipeline

machine learning:

The iris midpoint vector, head rotation vector, and head translation vector are concatenated into a single feature vector, which is used to classify the gaze as either ‘directed toward camera’ or ‘not directed toward camera’ via a support vector machine (SVM).

SVM training creates a model from training data labelled as belonging to one of two categories (‘looking at the camera’ and ‘not looking at the camera’). The SVM treats the training samples as points in space and finds the boundary that maximizes the width of the gap between the two categories. New examples are then mapped into the same space and assigned a category according to which side of the gap they fall on. A training data set of captured images was created and analyzed to extract the features, which were then used to train the SVM classifier. The classifier was exported as a ‘pickled’ object (i.e., a stream of bytes); it can then be loaded in the main block of code and used to classify every face captured in real time by the Raspberry Pi camera.
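The flow from features to a pickled model and back can be sketched with the standard library alone. This is a simplified stand-in, not the project's classifier: it assumes a linear decision boundary (the kernel is not stated), an 8-element feature vector (2D iris vector plus 3D rotation and translation vectors), and hypothetical weights that are not trained values. A real SVM would be trained with a machine learning library and the fitted object pickled in the same way.

```python
import pickle


def build_features(iris_vec, rot_vec, trans_vec):
    """Concatenate the three vectors into one flat feature vector."""
    return [*iris_vec, *rot_vec, *trans_vec]


def classify(model, features):
    """Linear decision rule: which side of the separating plane?"""
    score = sum(w * x for w, x in zip(model["w"], features)) + model["b"]
    return "looking at the camera" if score >= 0 else "not looking at the camera"


# Hypothetical parameters, for illustration only (not trained values).
model = {"w": [0.0, -1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], "b": 0.5}

blob = pickle.dumps(model)      # export as a stream of bytes
restored = pickle.loads(blob)   # reload in the main program

features = build_features((0.0, 0.2), (0.1, 0.0, 0.0), (0.0, 0.0, 50.0))
label = classify(restored, features)
```

Only pickles from a trusted source should be loaded, since unpickling can execute arbitrary code.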

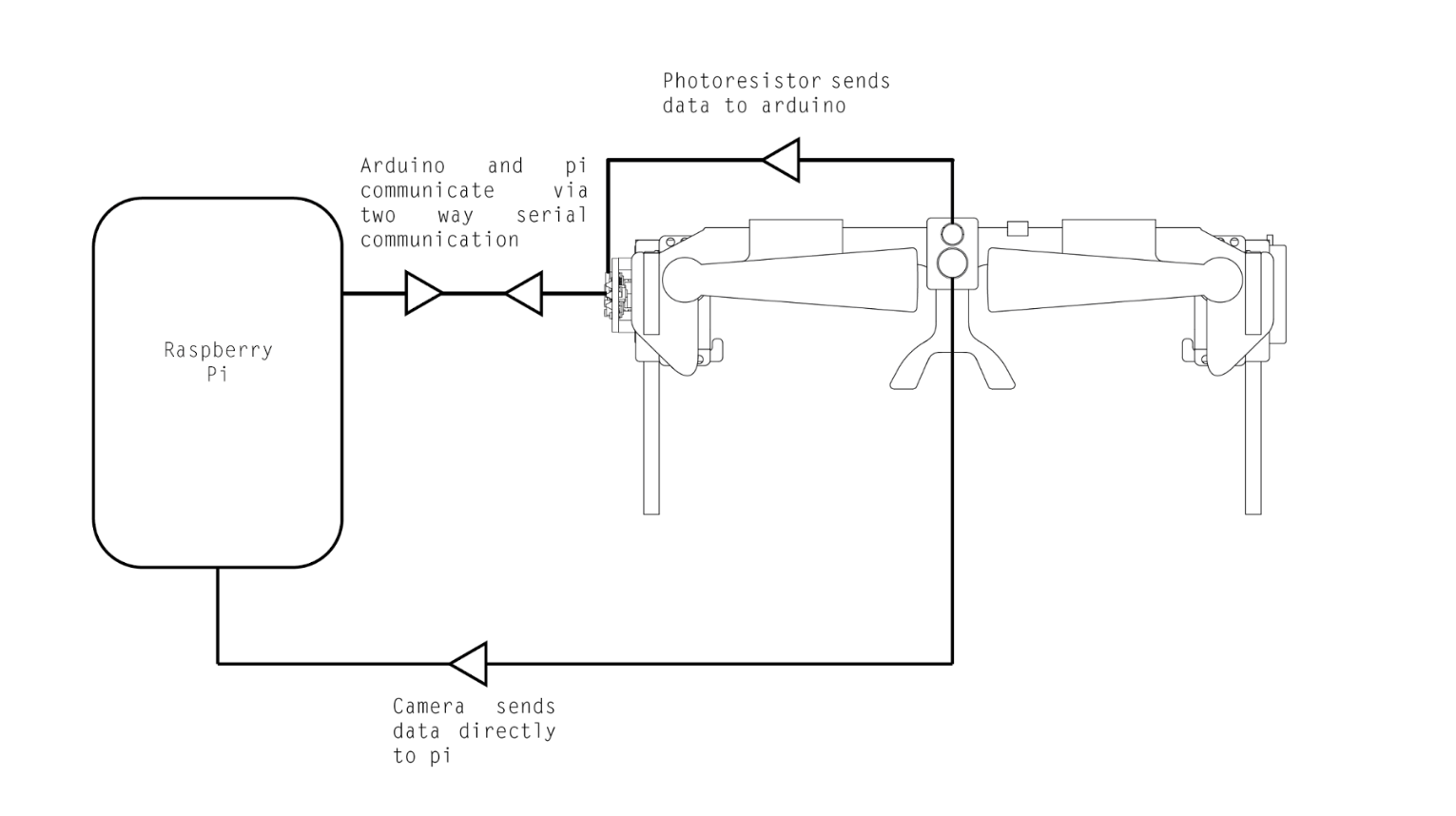

architecture:

Output from the Raspberry Pi travels to the Arduino via serial communication. The Arduino receives commands from the Raspberry Pi and performs the corresponding action.
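On the Raspberry Pi side, sending a command might look like the sketch below. The one-byte protocol (`L`, `R`, `W`) and the names `COMMANDS` and `send_command` are hypothetical, since the actual command set is not given. The function accepts any object with a `write(bytes)` method, so it would work with a pyserial `serial.Serial` port on the Pi; an in-memory buffer stands in for the serial link here.

```python
import io

# Hypothetical one-byte protocol; the real command set is not given.
COMMANDS = {
    "buzz_left": b"L",
    "buzz_right": b"R",
    "lower_wings": b"W",
}


def send_command(port, action):
    """Write the byte for `action` to `port`; return True if sent.

    `port` is any object with a write(bytes) method, for example a
    pyserial `serial.Serial` instance on the Pi.
    """
    payload = COMMANDS.get(action)
    if payload is None:
        return False  # unknown action or nothing to do: send nothing
    port.write(payload)
    return True


# Demo with an in-memory buffer standing in for the serial link.
fake_port = io.BytesIO()
send_command(fake_port, "buzz_left")
send_command(fake_port, "lower_wings")
```

Single-byte commands keep parsing on the Arduino simple, at the cost of carrying no parameters such as a buzz duration.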